Sometimes there are a few pages on a website that site owners don’t want search engine crawlers to access, because they don’t add much value and crawling them can overload the site with requests.

Controlling which pages crawlers can access is an important aspect of technical SEO.

If you’ve been a WordPress user, you’ll know that /wp-admin/ pages don’t need to be crawled, as they don’t provide any value to visitors. These pages are meant for site owners and developers, so allowing them to be crawled doesn’t make much sense.

Websites also often list pages such as the shopping cart or checkout in their robots.txt file to keep them from being crawled.

So what is Robots.txt?

A robots.txt file tells search engine crawlers (e.g. Googlebot) which URLs on the site they are allowed to access.

Keep in mind that robots.txt is not a mechanism to keep your page out of Google. If you want to remove it from Google Search completely, you should use a noindex tag instead.

Note: If another page links to your page, Google can still index it without its crawlers having visited the page. So if you want to keep a page out of Google Search results, putting it in robots.txt won’t work. In that case, use a noindex tag or password-protect the page, and don’t also block it in robots.txt: Google has to be able to crawl the page to see the noindex tag.
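To check whether a page actually carries a noindex rule, here’s a minimal sketch using Python’s standard-library html.parser. NoindexDetector and has_noindex() are hypothetical names, the sample page is made up, and the sketch only looks at robots and googlebot meta tags, not at an X-Robots-Tag HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class NoindexDetector(HTMLParser):
    """Hypothetical helper: flags <meta name="robots"|"googlebot"> tags containing noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values keep their case.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        name = attributes.get("name", "").lower()
        content = attributes.get("content", "").lower()
        # "robots" addresses every crawler; "googlebot" addresses only Google.
        if name in ("robots", "googlebot"):
            directives = [part.strip() for part in content.split(",")]
            if "noindex" in directives:
                self.noindex = True


def has_noindex(html):
    detector = NoindexDetector()
    detector.feed(html)
    detector.close()
    return detector.noindex


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))  # True
```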

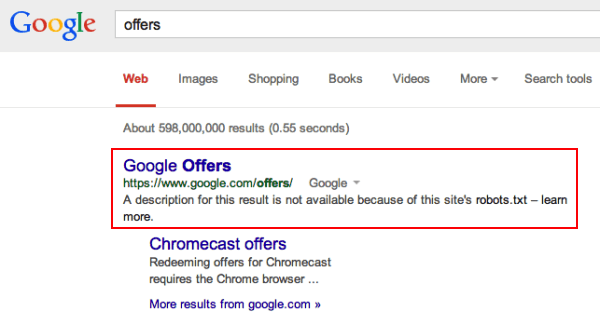

This is how a page will appear in search results if you’ve put it in the robots.txt file but Google has still indexed it:

The listing will not show any description of the page in the search results.

Understanding the Robots.txt file

A robots.txt file consists of one or more rules. These rules can either allow or disallow access for a crawler to a given path on the domain.

Let’s look at a simple robots.txt file with two rules to understand the different directives:

```
User-agent: Googlebot
Disallow: /noaccess/

User-agent: *
Allow: /

Sitemap: http://www.example.com/sitemap.xml
```

1. User-Agent

This directive specifies which search engine crawler the rule applies to, and it must be the first line of every rule group. Here’s a list of the 10 most popular web crawlers.

In the example above, the first group applies the Disallow rule only to Googlebot, which is Google’s main crawler.

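If you wish to apply the rule to all crawlers, you can use an asterisk (*), which matches every crawler except Google’s AdsBot crawlers; those have to be named explicitly. A crawler follows only the most specific group that matches its name, so in the example above Googlebot obeys its own group and ignores the * group.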
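A quick way to see how groups are picked is Python’s standard-library urllib.robotparser. The sketch below feeds it the example file and asks whether two crawlers may fetch a hypothetical /noaccess/page.html URL. Two caveats: if a blank line separates a User-agent line from its rules, this parser drops that group, so each group’s lines are kept together here; and it does not model Google-specific exceptions such as AdsBot.

```python
from urllib.robotparser import RobotFileParser

# The two-group example file (blank lines only between groups).
EXAMPLE_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /noaccess/

User-agent: *
Allow: /

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_ROBOTS_TXT.splitlines())

# Hypothetical URL under the disallowed directory.
url = "http://www.example.com/noaccess/page.html"
for agent in ("Googlebot", "Bingbot"):
    # can_fetch() uses the group whose User-agent matches, falling back to "*".
    print(agent, parser.can_fetch(agent, url))
# Googlebot False
# Bingbot True
```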

2. Disallow

Add any directory or single page that you don’t want search engines to crawl. For a single page, use the full page path. The value should start with a slash (/), and a directory should also end with a slash (/).

In the example above, because /noaccess/ is disallowed, Googlebot won’t crawl any URL that starts with http://www.example.com/noaccess/.
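The trailing slash matters because Disallow values are matched as prefixes of the URL path. Here’s a small sketch, again using urllib.robotparser, that compares Disallow: /noaccess/ with Disallow: /noaccess. The blocked_paths() helper and the sample paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, agent="Googlebot"):
    """Hypothetical helper: return the paths that robots_txt blocks for agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, "http://www.example.com" + p)]


# Made-up paths chosen to show the effect of the trailing slash.
paths = ["/noaccess/", "/noaccess/page.html", "/noaccess", "/noaccess-archive/"]

with_slash = "User-agent: *\nDisallow: /noaccess/\n"
without_slash = "User-agent: *\nDisallow: /noaccess\n"

print(blocked_paths(with_slash, paths))
# ['/noaccess/', '/noaccess/page.html']
print(blocked_paths(without_slash, paths))
# ['/noaccess/', '/noaccess/page.html', '/noaccess', '/noaccess-archive/']
```

Without the trailing slash, the rule also catches /noaccess-archive/, which is probably not what you intended.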

3. Allow

Specifies any directory or single page on the domain that you want search engines to crawl. It can also be used to override a Disallow directive. For instance, if I have the following in my robots.txt file:

```
User-agent: *
Disallow: /noaccess/
Allow: /noaccess/subpage

Sitemap: http://www.example.com/sitemap.xml
```

In this case:

- All search engine crawlers (user agents) are targeted, because of the asterisk.

- Any page that starts with http://www.example.com/noaccess/ is disallowed.

- The page http://www.example.com/noaccess/subpage can be crawled, because its Allow rule is more specific (a longer match) than the Disallow rule for the /noaccess/ directory.
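Google resolves conflicts like this by picking the most specific rule, meaning the one with the longest matching path; if an Allow and a Disallow rule are equally specific, the less restrictive Allow wins. The sketch below implements only that precedence for plain prefix rules (no * or $ wildcards). The is_allowed() function and the rule tuples are hypothetical illustrations, not Google’s code.

```python
def is_allowed(path, rules):
    """Hypothetical helper: Google-style precedence for plain prefix rules.

    rules is a list of (directive, value) pairs such as ("Disallow", "/noaccess/").
    """
    best_length = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, value in rules:
        if not value or not path.startswith(value):
            continue  # empty values and non-matching rules are ignored
        is_allow = directive.lower() == "allow"
        # A longer match is more specific; on a tie, Allow beats Disallow.
        if len(value) > best_length or (len(value) == best_length and is_allow):
            best_length = len(value)
            allowed = is_allow
    return allowed


RULES = [("Disallow", "/noaccess/"), ("Allow", "/noaccess/subpage")]

print(is_allowed("/noaccess/subpage", RULES))  # True: the Allow rule is longer
print(is_allowed("/noaccess/other", RULES))    # False: only the Disallow rule matches
print(is_allowed("/blog/", RULES))             # True: no rule matches
```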

4. Sitemap

This gives the location of a sitemap for the website. It must be a fully qualified URL (for example, http://www.example.com/sitemap.xml rather than /sitemap.xml). The Sitemap directive does not support the asterisk (*) wildcard.
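If you want to confirm which sitemap URLs a parser picks up, Python’s urllib.robotparser exposes them through site_maps() (Python 3.8+). This sketch reuses the Allow example file; is_fully_qualified() is a hypothetical helper that flags relative values like /sitemap.xml.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /noaccess/
Allow: /noaccess/subpage

Sitemap: http://www.example.com/sitemap.xml
"""


def is_fully_qualified(url):
    # A fully qualified URL carries its own scheme and host.
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap URLs as a list, or None if the file has none.
for sitemap in parser.site_maps() or []:
    print(sitemap, "OK" if is_fully_qualified(sitemap) else "not fully qualified")
# http://www.example.com/sitemap.xml OK
```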

Building your own Robots.txt File

You can create a robots.txt file with almost any text editor, such as Notepad or TextEdit. If you’re using WordPress, Wix, Shopify or another CMS, chances are that your robots.txt file is generated automatically and you might not be able to upload a custom file. However, you can edit the automatically generated one.

For WordPress, the best plugin to use to automatically generate a robots.txt file and place it in the right location is Yoast SEO.

To see if your website already has a robots.txt file, add /robots.txt to your site’s root URL. For instance, my robots.txt file is accessible at https://www.blog.nimitkapoor.com/robots.txt.
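Crawlers locate the file the same way: they take a page URL, keep only the scheme and host (plus any port), and request /robots.txt there. Here’s a minimal sketch with urllib.parse; robots_txt_url() is a hypothetical helper and /some-post/ is a made-up path. Because a robots.txt file only covers the protocol, host and port it is served from, a subdomain needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Hypothetical helper: the robots.txt location that governs page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host (netloc includes any port); drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.blog.nimitkapoor.com/some-post/?ref=home"))
# https://www.blog.nimitkapoor.com/robots.txt

# To download and parse the live file (needs network access):
# from urllib.robotparser import RobotFileParser
# parser = RobotFileParser(robots_txt_url("https://www.blog.nimitkapoor.com/"))
# parser.read()
```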

To build or update your robots.txt file, you first need to understand how path values work. Reputable bots such as Googlebot support two wildcards in path values:

- An asterisk (*) designates 0 or more instances of any valid character.
- A dollar sign ($) designates the end of the URL.

Let’s look at a few examples:

| Path | What it matches |
|---|---|
| / | Matches the root and any lower-level URL. |
| /$ | Matches only the root. Any lower-level URL is allowed for crawling. |
| /baseball | Matches any path that starts with /baseball. Note that the matching is case-sensitive. ✅ Matches: /baseball; ❌ Doesn’t match: /Baseball |
| /baseball/ | Matches anything in the /baseball/ folder. ✅ Matches: /baseball/bats; ❌ Doesn’t match: /baseball |
| /*.php | Matches any path that contains .php. ✅ Matches: /baseball.php; ❌ Doesn’t match: /windows.PHP |
| /*.php$ | Matches any path that ends with .php. ✅ Matches: /baseball.php; ❌ Doesn’t match: /baseball/filename.php?parameters |
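To make the two wildcards concrete, here’s a minimal sketch that translates a path value into a Python regular expression and checks it against the table rows. rule_to_regex() and rule_matches() are hypothetical names, and the sketch skips details a real crawler handles, such as percent-encoding normalization.

```python
import re


def rule_to_regex(path_rule):
    """Translate a robots.txt path value into a compiled regular expression.

    '*' matches any run of characters (including none); a trailing '$'
    anchors the rule to the end of the URL. Everything else is literal.
    """
    anchored = path_rule.endswith("$")
    if anchored:
        path_rule = path_rule[:-1]
    # Escape the literal pieces, then join them with '.*' where each '*' stood.
    body = ".*".join(re.escape(piece) for piece in path_rule.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(path_rule, url_path):
    # re.match() anchors only at the start of the string, giving prefix matching.
    return rule_to_regex(path_rule).match(url_path) is not None


# Rows from the table: (rule, path, expected result)
CASES = [
    ("/", "/baseball/bats", True),
    ("/$", "/", True),
    ("/$", "/baseball", False),
    ("/baseball", "/baseball", True),
    ("/baseball", "/Baseball", False),
    ("/baseball/", "/baseball/bats", True),
    ("/baseball/", "/baseball", False),
    ("/*.php", "/baseball.php", True),
    ("/*.php", "/windows.PHP", False),
    ("/*.php$", "/baseball.php", True),
    ("/*.php$", "/baseball/filename.php?parameters", False),
]

for rule, path, expected in CASES:
    assert rule_matches(rule, path) == expected, (rule, path)
print("all table rows behave as described")
```

Because a rule without $ is only anchored at the start, it behaves as a prefix match, which is why /baseball also matches longer paths such as /baseball/bats.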

Robots.txt File (Amazon.com.au Example)

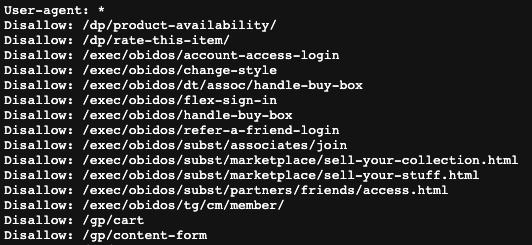

Let’s look at Amazon’s Robots.txt file:

(Just a snippet of the first few lines of the file)

Amazon has instructed all user agents (by using *) not to crawl pages whose path starts with /gp/cart (second-to-last line in the image above).

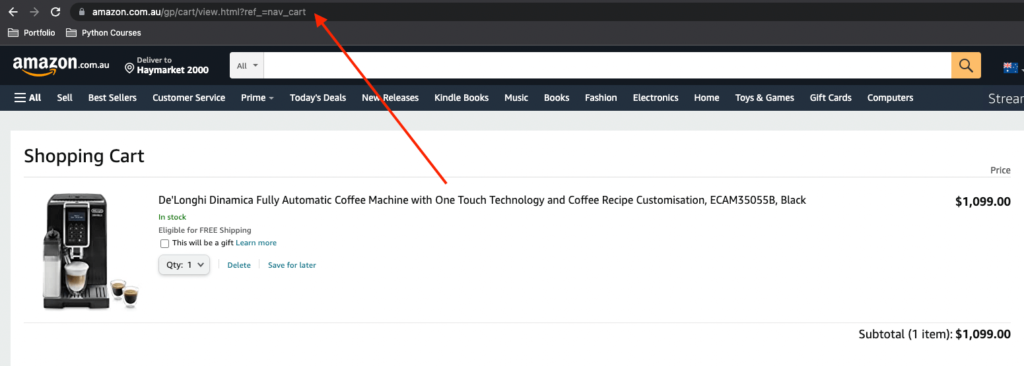

Do you think the following page (my shopping cart) will be crawled by GoogleBot?

The URL of the page is: https://www.amazon.com.au/gp/cart/view.html?ref_=nav_cart

Answer: No. The URL path /gp/cart/view.html starts with /gp/cart, so it matches Amazon’s Disallow rule.
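The same conclusion can be checked with urllib.robotparser. This sketch assumes a simplified excerpt containing only the User-agent: * group’s Disallow: /gp/cart rule, not Amazon’s full file.

```python
from urllib.robotparser import RobotFileParser

# Assumption: a simplified excerpt, not Amazon's full robots.txt.
AMAZON_EXCERPT = """\
User-agent: *
Disallow: /gp/cart
"""

parser = RobotFileParser()
parser.parse(AMAZON_EXCERPT.splitlines())

cart_url = "https://www.amazon.com.au/gp/cart/view.html?ref_=nav_cart"
# /gp/cart/view.html starts with /gp/cart, so the Disallow rule applies.
print(parser.can_fetch("Googlebot", cart_url))  # False
```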

Editing Robots.txt File (Blog Example)

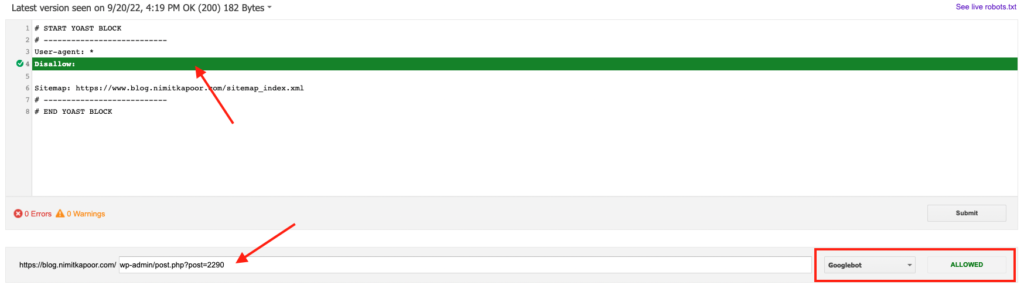

Let’s take my blog as an example. I’m using the Yoast SEO plugin on WordPress for my robots.txt file. This is what my robots.txt file currently looks like:

```
# START YOAST BLOCK
# ---------------------------
User-agent: *
Disallow:

Sitemap: https://www.blog.nimitkapoor.com/sitemap_index.xml
# ---------------------------
# END YOAST BLOCK
```

As you can see, I currently allow crawling of the entire site, since an empty Disallow: value blocks nothing. Let’s say I don’t want any search engine crawlers to crawl /wp-admin/ pages.

This is what my robots.txt should look like to enable that:

```
# START YOAST BLOCK
# ---------------------------
User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.blog.nimitkapoor.com/sitemap_index.xml
# ---------------------------
# END YOAST BLOCK
```

But before saving these changes, I can test my paths using Google’s robots.txt Tester.

Before the changes, you can see that post pages inside /wp-admin/ are allowed (screenshot below):

But after adding the Disallow: /wp-admin/ directive, the same URL is blocked.
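If you’d rather check paths locally, or Google’s tester isn’t available to you, a rough equivalent is to run both versions of the file through Python’s urllib.robotparser. The admin and post URLs below are hypothetical examples, can_crawl() is a hypothetical helper, and this parser follows Python’s interpretation of robots.txt, which can differ from Google’s in edge cases.

```python
from urllib.robotparser import RobotFileParser

BEFORE = """\
User-agent: *
Disallow:

Sitemap: https://www.blog.nimitkapoor.com/sitemap_index.xml
"""

AFTER = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.blog.nimitkapoor.com/sitemap_index.xml
"""


def can_crawl(robots_txt, url, agent="Googlebot"):
    """Hypothetical helper: parse robots_txt and ask whether agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Hypothetical URLs used only for illustration.
admin_url = "https://www.blog.nimitkapoor.com/wp-admin/post.php"
post_url = "https://www.blog.nimitkapoor.com/hello-world/"

for label, robots_txt in (("before", BEFORE), ("after", AFTER)):
    print(label, can_crawl(robots_txt, admin_url), can_crawl(robots_txt, post_url))
# before True True
# after False True
```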

Once I’ve tested that the directive works correctly, I can simply go ahead and change it in the Yoast SEO Plugin settings.

Go to Yoast SEO -> Tools -> File Editor and update the robots.txt file there.

Limitations of Robots.txt

- robots.txt directives may not be supported by all search engines.

  While Googlebot and other reputable crawlers adhere to robots.txt directives, some crawlers do not. So if you have private or sensitive information on your site, it’s best to password-protect it.

- Different crawlers interpret syntax differently.

  Even reputable crawlers can interpret the same rules differently, and some may not understand certain instructions at all.

- A page that’s disallowed in robots.txt can still be indexed if other sites link to it.

  This is extremely important: if you don’t want a page to show in search results at all, add a noindex meta tag or password-protect the files on your server.
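To see how interpretations can differ in practice, here’s a small sketch that runs two orderings of the /noaccess/subpage example through Python’s standard urllib.robotparser, which checks rules in file order and applies the first one that matches. Google, by contrast, picks the most specific matching rule regardless of order, so Googlebot may crawl /noaccess/subpage under both files. stdlib_allows() and the ExampleBot user agent are hypothetical.

```python
from urllib.robotparser import RobotFileParser

URL = "http://www.example.com/noaccess/subpage"


def stdlib_allows(robots_txt, url, agent="ExampleBot"):
    """Hypothetical helper: the verdict of Python's first-match parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


disallow_first = "User-agent: *\nDisallow: /noaccess/\nAllow: /noaccess/subpage\n"
allow_first = "User-agent: *\nAllow: /noaccess/subpage\nDisallow: /noaccess/\n"

print(stdlib_allows(disallow_first, URL))  # False: the Disallow line matches first
print(stdlib_allows(allow_first, URL))     # True: the Allow line matches first
```

One practical takeaway: listing the more specific Allow line before the broader Disallow line keeps first-match and longest-match parsers in agreement.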